A/B testing — putting two or more versions out in front of users and seeing which impacts your key metrics — is exciting. The ability to make decisions on data that lead to positive business outcomes is what we all want to do.

But there is far more to A/B testing than meets the eye. It is not as simple as advertised: “change a button from blue to green and see a lift in your favorite metric.”

The unfortunate reality of A/B testing is that in the beginning, most tests are not going to show positive results. Teams that start testing often won’t find any statistically significant changes in the first several tests they run.

Like picking up any new strategy, you need to learn how to crawl before you can learn how to run. To get positive results from A/B testing, you must understand how to run well-designed experiments. This takes time and knowledge, and a few failed experiments along the way.

In this post, I’ll dive into what it takes to design a successful experiment that actually impacts your metrics.

Setting Yourself Up for Success

First up: Beyond having the right technology in place, you also need an understanding of the data you’re collecting, the business smarts to see where you can drive impact for your app, the creative mind and process to come up with the right solutions, and the engineering capabilities to act on them.

All of this is crucial for success when it comes to designing and running experiments.

Impact through testing does not happen on a single test. It’s an ongoing process that needs a long-term vision and commitment. There are hardly any quick wins or low-hanging fruit when it comes to A/B testing. You need to set yourself up for success, and that means having all those different roles or stakeholders bought into your A/B testing efforts and a solid process to design successful experiments. So, before you get started with A/B testing, you need to have your Campaign Management strategy in place.

When you have this in place, you’re ready to start. So how do you design a good experiment?

Designing an Experiment

The first step: Create the proper framework for experimentation. The goal of experimentation is not simply to find out “which version works better,” but to determine the best solution for your users and your business.

In technology, especially in mobile technology, this is an ongoing process. Devices, apps, features, and users change constantly. Therefore, the solutions you’re providing for your users are ever-changing.

Finding the Problem

The basics of experimentation start, and this may sound cliché, with real problems. It’s hard to fix something that is not broken or is not a significant part of your users’ experience. Problems can be found where you have the opportunity to create value, remove blockers, or create delight.

The starting point of every experiment is a validated pain point. Long before any technical solution, you need to understand the problem you chose to experiment with. Ask yourself:

- What problems do your users face?

- What problems does your business face?

- Why are these problems?

- What proof do you have that shows these are problems? Think surveys, gaps or drops in your funnel, business cost, app reviews, support tickets, etc. If you do not have any data to show that something is a problem, it’s probably not the right problem to focus on.

Finding Solutions (Yeah, Multiple)

Once the problem is validated, you can jump to a solution. I won’t lie, quite often you will already have a solution in mind, even before you’ve properly defined the problem. Solutions are fun and exciting. However, push yourself to understand the problem first, as this is crucial to finding not just a solution but the right solution.

Inexperienced teams often run their first experiments with the first solution they could think of: “This might work, let’s test it,” they say.

But they don’t have a clear decision-making framework in place. Often, these quick tests don’t yield positive results. Stakeholders in the business lose trust in the process and it becomes harder to convince your colleagues that testing is a valuable practice.

My framework goes as follows.

- Brainstorm a handful of potential solutions, aiming for about eight. Not just variants, but completely different ways to solve the problem for your users within your product.

- Out of this list, grab two-to-three solutions that you’ll mark as “most promising.” This choice can be based on gut feeling, technical feasibility, time/resources, or data.

- Now, for each of these two or three most promising solutions, find up to four variants.

This process takes you from the single solution you planned to test against the control to a range of about 10 solutions and variants that can help you get positive results. With about an hour of work, you significantly increase your chances of creating a winning experiment.

Now that you have your solutions, we’re almost ready to start the experiment. But first…

Defining Success

We now have a problem and have a set of solutions with different variants. This means we have an expected outcome. What are we expecting to happen when we run the test and look at the results?

Before you launch your test, you need to define upfront what success will look like. Most successful teams have something that looks like this:

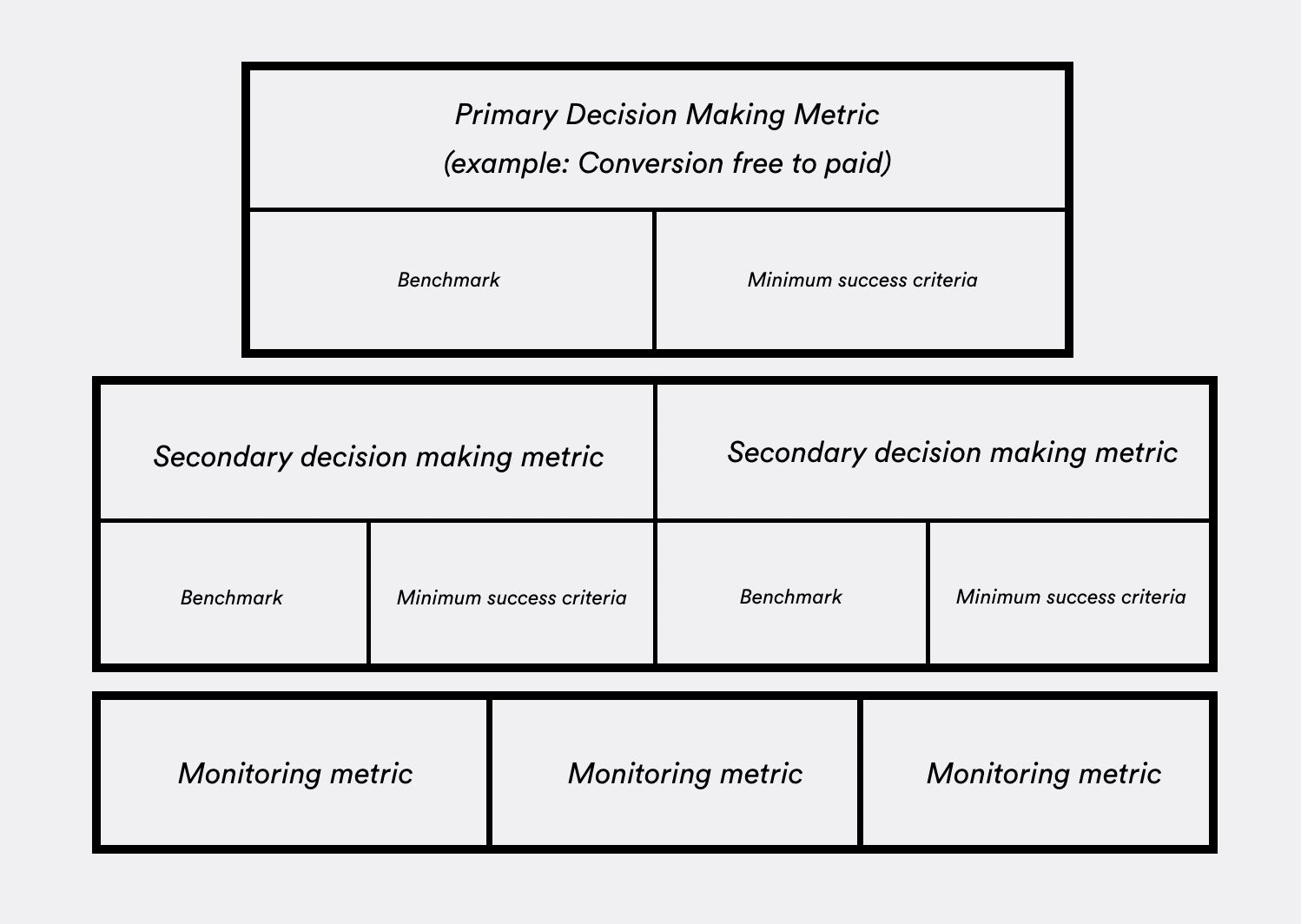

- Primary decision-making metric: The primary decision-making metric is the goal metric that you want to impact with your test. It’s the single most important user behavior you want to improve.

- Secondary decision-making metrics: These are often two-to-three metrics. They are directly impacted by the experiment, but aren’t the most important metric. The secondary metrics create context for the primary decision-making metric, and help us make the right decisions. Even if the primary metric is positive, too much of a decline in the secondary metrics could change your decision on whether the experiment was a success.

- Monitoring metrics: These are extremely important. You don’t use them to decide whether the outcome of the experiment was a success, but to judge the health of the environment in which the experiment ran.

With an A/B test, we want to have a controlled environment where we can decide if the variant we created has a positive outcome. Therefore, we need monitoring metrics to ensure the environment of our experiment is healthy. This could be acquisition data, app crash data, version control, and even external press coverage.
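
To make the three metric roles concrete, here is a minimal sketch of how a team might write them down before launch. The class name `ExperimentPlan`, the `validate` helper, and every metric name are hypothetical and only meant as an illustration, not a prescribed format.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentPlan:
    """Metric roles for one experiment, written down before launch."""
    name: str
    primary_metric: str  # the single metric the decision hinges on
    secondary_metrics: list[str] = field(default_factory=list)   # context for the decision
    monitoring_metrics: list[str] = field(default_factory=list)  # environment health only

    def validate(self) -> list[str]:
        """Return warnings when the plan drifts from the guidance above."""
        warnings = []
        if not 2 <= len(self.secondary_metrics) <= 3:
            warnings.append("expected two to three secondary metrics")
        if not self.monitoring_metrics:
            warnings.append("no monitoring metrics defined")
        if self.primary_metric in self.secondary_metrics:
            warnings.append("primary metric is also listed as secondary")
        return warnings


# All names below are made up for illustration.
plan = ExperimentPlan(
    name="onboarding_checklist_v1",
    primary_metric="day7_retention",
    secondary_metrics=["onboarding_completion", "sessions_per_user"],
    monitoring_metrics=["crash_rate", "new_installs", "app_version_mix"],
)
print(plan.validate())  # an empty list means the plan passes these basic checks
```

Keeping the monitoring metrics in a separate field is deliberate: they tell you whether the test environment was healthy, not whether the variant won.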

Setting the Minimum Success Criteria

Alongside the predefined metrics on which you’ll measure the success of your experiment, you need clear minimum success criteria. This means setting a defined uplift that you consider successful. Is an increase of 10 percent needed, or is 0.5 percent enough, to be satisfied with the solution to the problem we’re trying to solve?

Since the goal of running an experiment is to make a decision, these criteria are essential to define. As humans, we’re easily persuaded. If we don’t define upfront what success looks like, we may be too easily satisfied.

For example: If you run a test and see a two percent increase on your primary decision-making metric, is that result good enough? If you did not define success criteria upfront, you might decide that this is okay and roll out the variant to the full audience.

However, as we have many different solutions still on the backlog, we have the opportunity to continue our experimentation and find the best solution for the problem. Success criteria help you to stay honest and ensure you find the best solution for your users and your business.
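
As a rough sketch of how a predefined criterion keeps the decision honest, the snippet below compares an observed relative uplift against a minimum uplift set before launch. The significance check is a pooled two-proportion z-test, which is my own choice for illustration and not something this post prescribes. The function names, the 0.05 threshold, and all sample numbers are assumptions.

```python
import math


def relative_uplift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control, e.g. 0.02 for +2%."""
    return (variant_rate - control_rate) / control_rate


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value from a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return math.erfc(abs(z) / math.sqrt(2))


def decide(conv_a: int, n_a: int, conv_b: int, n_b: int,
           min_uplift: float, alpha: float = 0.05) -> str:
    """Apply the success criteria that were fixed before the test started."""
    if two_proportion_p_value(conv_a, n_a, conv_b, n_b) >= alpha:
        return "inconclusive"
    if relative_uplift(conv_a / n_a, conv_b / n_b) >= min_uplift:
        return "success"
    return "below minimum success criteria"


# Hypothetical numbers: a 2% relative uplift (10.0% -> 10.2% conversion).
print(decide(1_000, 10_000, 1_020, 10_000, min_uplift=0.05))
# → inconclusive (sample too small to tell)
print(decide(100_000, 1_000_000, 102_000, 1_000_000, min_uplift=0.05))
# → below minimum success criteria (real, but not enough)
```

In the second call the two percent lift is statistically real, yet it still falls short of the five percent the team committed to, so the next solution on the backlog gets its turn.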

Share Learnings With Your Team

Finally, share your learnings. Be mindful here that sometimes learnings come from a combination of experiments where you optimized toward the best solution.

When you share your learnings internally, make sure that you document them well and share with the full context — how you defined and validated your problem, decided on your solution, and chose your metrics.

My advice would be to find a standard template that you can easily fill out and share internally. Personally, I like to keep an experiment tracker. This allows you to document every step and share the positive outcomes and learnings.
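
If you prefer to keep the tracker in code rather than a spreadsheet, one possible shape for a single entry is sketched below. The `ExperimentRecord` class and all of its field names and values are hypothetical. They simply mirror the steps in this post (problem, proof, solution, variants, metrics, success criteria, learnings).

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ExperimentRecord:
    """One row in an experiment tracker; fields follow the steps in this post."""
    problem: str               # the validated pain point
    evidence: str              # proof that it is a problem
    solution: str              # the solution being tested
    variants: list[str]        # variants of that solution
    primary_metric: str
    min_uplift: float          # minimum success criterion, as a relative uplift
    outcome: str = "not started"
    learnings: str = ""


# Made-up example entry.
record = ExperimentRecord(
    problem="New users drop off before finishing onboarding",
    evidence="Funnel shows a large drop between onboarding steps 2 and 3",
    solution="Replace the tutorial with a checklist",
    variants=["three-item checklist", "five-item checklist"],
    primary_metric="day7_retention",
    min_uplift=0.05,
)

# Serialize to JSON so the record can be shared or stored alongside others.
print(json.dumps(asdict(record), indent=2))
```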

Creating a Mobile A/B Testing Framework That Lasts

All this is a lot of work, and it’s not always easy. Setting up your framework for experimentation will take trial, error, education, and time! But it’s worth it. If you skip any of the above steps and your experiment fails, you won’t know where or why it failed, and you are basically guessing again. We all know the notion of “Move fast and break things,” but spending an extra day to set up a proper test that gives the right results and is part of a bigger plan is absolutely worth it.

And don’t worry, you’ll still break plenty of things. Most experiments are failures and that is fine. It’s OK for an experiment to impact a metric negatively. Breaking things means that you’re learning and touching a valuable part of the app. This is the whole reason why you run an experiment: to see if something works better. Sometimes that is not the case… As long as you have a well-defined experiment framework, you can explain why this happened and set up a follow-up experiment that will help you find a positive outcome.

—

Leanplum is a mobile engagement platform that helps forward-looking brands like Grab, IMVU, and Tesco meet the real-time needs of their customers.